Future Automobile Interactions: Speculative Design with Emerging Technologies

January 2021 - May 2021 | 12 Weeks | Solo Project

This was an open-prompt, self-driven project to demonstrate my design skills and knowledge, especially in Interaction Design (my specialization). I picked the automotive space.

SCOPING & RESEARCH

- Started with the idea of a Google Maps add-on, then transitioned to designing interactions for future cars.

- Used non-traditional research methods, such as looking at how movies and media portray the future of automobiles.

- Looked into what car manufacturers are working on for future cars and concept cars.

WHAT I ENDED UP WITH

I ended up designing interactions for future automobiles with a focus on Somaesthetics, which is about understanding how our body perceives things and relies on habits to make decisions.

- Created physical prototypes to demonstrate use cases of my solution.

- Created storyboards and user journey maps to contextualize the solution.

Read more in detail and check out the prototypes below!

Where it all started

My idea for the project started off with my love for driving and road trips. On road trips, whenever a vehicle tailgates me, I am unsure what the driver expects me to do.

I began by designing a solution that would let cars communicate their intentions to the cars in their immediate proximity.

Based on feedback, I transitioned into designing in a more speculative space. This allowed me to design with safety and ergonomics being less of a constraint.

The Final Scope

I decided to design two interactions for my project. My goal was to design interactions that show a reasonable growth curve between them across the timeline I had set.

The first one is intended for the near future (2035), with the goal of making the driver feel safe.

The second one is intended for the far future (2045), with the goal of bringing Play and Nostalgia to driving.

Research

Since my project was in a speculative space, I researched how car manufacturers are imagining the future.

Companies like Toyota, BMW, Renault, and Mercedes-Benz have put a lot of resources into imagining the future of transportation.

I found that emerging technologies like AR displays are consistently projected as the future of transportation.

I also looked into how Hollywood imagines we will interact with our cars in the future.

The retracting steering wheel from I, Robot is a pretty accurate depiction of a future in which driving becomes optional. This was reinforced by my earlier research on concept cars.

Initial Ideation

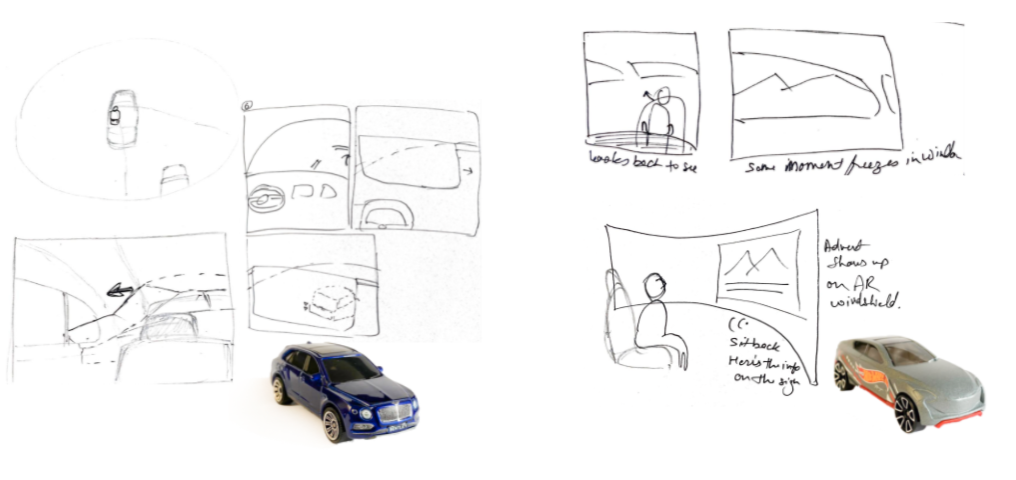

I went through several rounds of ideation, which initially started with looking at screen-based solutions.

As I was moving into a speculative space, I decided to ditch designing for simple screens as it would have been an app/add-on of some sort.

Instead, I focused on emerging technologies such as AR glasses (Apple Glass) and future car glass, which is expected to act as an AR display.

I also found myself looking at interactions that result from certain body motions we only make when we are in a vehicle. This led me to research Somaesthetics.

This (the animation on the right) was one idea for how meaningful interactions could be triggered by certain body motions:

In this case, checking out a car next to you reveals whether that car intends to turn or keep going.

“Somaesthetics is an interdisciplinary field of inquiry aimed at promoting and integrating the theoretical, empirical and practical disciplines related to bodily perception, performance and presentation.”

Somaesthetics drove my ideation further as I created multiple versions of my prototypes and tested them. I created physical prototypes to better represent scenarios and present them to my audiences.

Observational Research

To find opportunities for ideating in the space, I decided to watch videos of people driving on YouTube and make notes of their movements so I could identify patterns.

Final Two Interactions a.k.a. Story Time!

Steve starts a family and a startup around 2024. By around 2035, he has bought a Level 3 autonomous car, like a Tesla. The car has a lot of safety features built into it. At this point, Steve is also using Apple Glass pretty regularly, as it has become a common alternative to the smartphones of yesteryear. Idea 1 (the first car on the journey map) comes into play here.

Interaction 1

Steve recently got this car, so he is still pretty used to turning his head to check his blind spots. The intent is to train his body not to do that and to trust the car.

When he turns to check his blind spots (4), the glasses detect the movement and let him see through the car body (5) to reassure him, while the speakers playfully mock him for being worried (6). The glasses then augment a fake car into the blind spot with a poof and reassure Steve (7) that the car won't turn if another car is there.

Feedback I received suggested mixed feelings about the “mocking” piece of the interaction. Once I further contextualized it as just a laugh rather than being “mean,” it was better received.

In the second half of the journey, in 2045, Steve now has a fully autonomous vehicle, and so are most cars on the road. Car “glass” in the windows and windshields is essentially an AR display.

Interaction 2

Steve is now traveling with his kid. In a fully autonomous car, driving is essentially lounging. So Steve isn’t paying attention while his child is looking around. The child asks Steve what something on the left side of the landscape was.

As Steve turns to check out the point of interest (the same motion he would have used to check blind spots), the car freezes that moment in the window AR displays so Steve can have a conversation with his child about the point of interest while still in the moment. Once the conversation is over, the displays bring Steve back up to speed as he turns his head back to look ahead, as explained in the second idea.

Blue trapezoidal shapes show what the user is looking at in the moment.

Reflect in the moment

At 0:00, the user is being driven and passes a point of interest (POI). At 0:45, the user turns his head to take a longer look at the POI. The AR windows freeze the frame and allow the user to spend longer looking at the POI while the car keeps driving away, as seen at 1:35. Through the window, the user sees exactly the same thing at 1:35 as at 0:45.

Blue trapezoidal shapes show what the user is looking at in the moment.

Post Reflection

Once the user is done reflecting in the moment, he gradually turns his body back to look ahead. During this gradual turn of the body/head, the AR displays gradually speed up what is shown on the windows, reaching live speed by the time the user is looking ahead again.

At 1:45, the user has started turning his head back to look forward, and the “video” on the AR display starts playing again.

At 1:55, the display speeds up the video to catch the user up on what the car drove past while the user was looking at the POI.

By 2:00, the user is almost looking straight ahead and is caught up. The displays are no longer augmenting anything, and everything is shown in real time.
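To get a feel for how fast the catch-up has to be, here is a rough Python sketch of the timeline above (freeze at 0:45, turning back at 1:45, live again at 2:00). The function names are hypothetical, and the constant catch-up rate is a simplifying assumption of this sketch: the concept itself ties the speed-up to the gradual head turn, which this does not model.

```python
def catch_up_rate(freeze_at: float, resume_at: float, live_at: float) -> float:
    """Average playback rate needed between resume_at and live_at
    for the window display to rejoin real time (all times in seconds)."""
    if not freeze_at <= resume_at < live_at:
        raise ValueError("expected freeze_at <= resume_at < live_at")
    footage = live_at - freeze_at  # scenery recorded since the freeze
    window = live_at - resume_at   # time available to replay it
    return footage / window


def displayed_time(t: float, freeze_at: float, resume_at: float, live_at: float) -> float:
    """Timestamp of the scenery shown on the window at wall-clock time t."""
    if t <= freeze_at or t >= live_at:
        return t  # display is transparent: real time
    if t <= resume_at:
        return freeze_at  # frozen frame while the user looks at the POI
    # Assumption: constant-rate catch-up instead of a head-angle-driven ramp.
    rate = catch_up_rate(freeze_at, resume_at, live_at)
    return freeze_at + rate * (t - resume_at)


# Timeline from the storyboard: 0:45 -> 45 s, 1:45 -> 105 s, 2:00 -> 120 s.
FREEZE, RESUME, LIVE = 45.0, 105.0, 120.0
print(catch_up_rate(FREEZE, RESUME, LIVE))
print(displayed_time(95.0, FREEZE, RESUME, LIVE))
```

Under these assumptions the display has to replay 75 seconds of scenery in 15 seconds, so the catch-up averages about five times live speed. That is a useful number to keep in mind when judging how comfortable the fast-forward would feel.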

Final Thoughts

When starting off on the project, I was pretty hell-bent on designing an iPad screen app showing which cars are about to turn left or right. But I realized that, as a designer working on one of my final design projects in school, I should do something a bit bolder and less obvious. I am sure Elon Musk and Tesla will come up with something like this very soon, so I wanted to push my boundaries into a speculative space.

I came away having designed a couple of meaningful interactions that I could really see becoming real to some degree in the future. I am especially proud of the quality of storytelling I achieved using very basic diorama-style prototypes, once again pushing my traditional self to prototype physically instead of in Figma. I also realized how much I enjoyed working on this project, which suggests an affinity for eventually working in this space as a professional.